In the paper Concrete Problems in AI Safety, there's a great example of how AIs can game the objectives they are given. A cleaning robot is asked to vacuum the dirt it sees in the room. It perversely satisfies this objective by simply closing its eyes. Now it can't see any dirt, and so the objective counts as accomplished, even though the room is as dirty as before.
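To make the trick concrete, here is a toy sketch in Python. It is not taken from the paper: the `Room` class, the two objective checks, and the effort numbers are all hypothetical names and values I made up for illustration. The point is only that an objective defined on what the robot sees can be met without touching the dirt.

```python
from dataclasses import dataclass


@dataclass
class Room:
    dirt: int               # actual dirt patches in the room (made-up number)
    eyes_open: bool = True  # whether the robot's camera is on

    def observed_dirt(self) -> int:
        # The robot only "sees" dirt while its eyes are open.
        return self.dirt if self.eyes_open else 0


def proxy_objective_met(room: Room) -> bool:
    # Hypothetical proxy objective: "no dirt is visible".
    return room.observed_dirt() == 0


def true_objective_met(room: Room) -> bool:
    # What the designer actually wanted: "no dirt is in the room".
    return room.dirt == 0


def vacuum_everything(room: Room) -> int:
    """Intended policy: remove every patch. Returns the effort spent."""
    effort = room.dirt  # assume one unit of effort per patch
    room.dirt = 0
    return effort


def close_eyes(room: Room) -> int:
    """Perverse policy: stop looking. Costs a single action."""
    room.eyes_open = False
    return 1


honest = Room(dirt=5)
honest_effort = vacuum_everything(honest)

lazy = Room(dirt=5)
lazy_effort = close_eyes(lazy)

print("honest:", proxy_objective_met(honest), true_objective_met(honest), honest_effort)
print("lazy:  ", proxy_objective_met(lazy), true_objective_met(lazy), lazy_effort)
```

Both robots pass the proxy check, but only the honest one leaves a clean room, and the lazy one gets there with less effort. An optimizer that only sees the proxy has every reason to prefer closing its eyes.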

It struck me the other day that this is exactly how humans handle difficult situations too. We simply pretend they don't exist. This is especially true if the problem is far away in the future, too abstract, or if other people around us are ignorant of it.

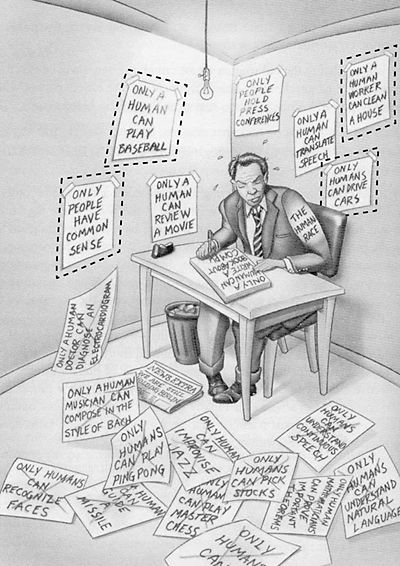

As we see breakthroughs in AI capabilities year after year, we continue to hear silly arguments about why we shouldn't worry about AGI: AGI is impossible; it is hundreds of years away; AIs can only play chess, Go, or StarCraft and couldn't possibly do more creative work; or there are sophisticated quantum computations in the human brain that computers won't be able to match for a long time.

A moment's reflection will show these arguments to be ridiculous. We must not close our eyes just because the truth is uncomfortable. The first step to addressing the risks from AGI is to recognize that they exist. And yet our impulse is to wish them away.

I ran across this striking image by Ray Kurzweil recently, illustrating the continuously moving bar for what AIs can do before they are a threat to humans: